Automation versus AI, and how people confuse the two

Let’s start with the basics: automation. When we think of simple machines that can do work, we’re not talking about artificial intelligence. This may seem obvious, but, when we see things like giant assembly lines of robotic arms that build cars, it is tempting to think that these machines are thinking. In actuality, classic robotics, while amazing, is not designed to think or problem solve. The main goal of automation is simply to recreate certain steps with very high precision (for instance: always screwing in a bolt at the exact same spot of a car panel, or employing sensors to always sort objects based on size, like in an automated coin counting machine). This is also true for things like automated payment kiosks, whether at a grocery store or fast-food chain.

Where AI comes into play is when we talk about reasoning, or thinking. An AI system can create new outputs based on very different inputs. Let’s take ChatGPT for example, as it’s among the most famous AI systems currently available. We can ask ChatGPT a question in any number of ways, and it will for the most part understand those differences and still give essentially the same answer. Where on an assembly line a change of only a tenth of an inch can disrupt the entire process, ChatGPT can handle many different inputs and still produce a relevant, and often unique, answer.

ChatGPT and the rise of Large Language Models

ChatGPT is a very constrained system designed only to do a certain thing: mimic human language. It does this by drawing on huge troves of data, which it analyzes with incredibly fast processing speed. But is it conscious, and able to think, like a human? This is where the constraints come into play.

The GPT in ChatGPT stands for “generative pre-trained transformer”, and work on earlier kinds of language models started as early as the 1980s. In effect, it is “trained” on (i.e. fed) huge amounts of data, and from this data it uses probability and statistics to predict novel outputs based on questions or statements that a user makes. Since the 1980s, language models have made significant advancements, culminating in what we see today with the market dominance of ChatGPT.

How do Large Language Models work, exactly, you may ask? Roughly speaking, they take the pieces of text they are trained on and assign values to them based on how frequently they occur and how close they tend to appear to one another. That’s a mouthful, but in short it means that they can learn to predict how we speak and write with startling accuracy.
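To make the idea of predicting from frequency and proximity concrete, here is a deliberately tiny sketch. It is not how ChatGPT is built (real Large Language Models use neural networks trained on vastly more data); it is a simple word-pair counter, and the function names and the sample sentence are made up for illustration. It only looks at which word most often comes directly after another.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each word is directly followed by each other word."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    # Pair every word with its immediate neighbour (proximity)
    # and tally how often each pairing occurs (frequency).
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(follow_counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = follow_counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, chosen only for illustration.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" more often than "mat" or "sofa"
print(predict_next(model, "on"))   # "the" is the only word that follows "on"
print(predict_next(model, "dog"))  # None: the model has never seen "dog"
```

Even this toy version shows the basic trade-off: it can only predict from patterns it has already seen, and the more text it is given, the better its guesses become.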

But where are their limitations? For one thing, ChatGPT and every other Large Language Model rely on probabilistic computation. As far back as Alan Turing, the question of the limits of computation has been a large one. The view in many tech and scientific circles is this: computation can do wonders, but it cannot achieve understanding. This is why people so forcefully push back against the idea that ChatGPT, or any other Large Language Model, can ever achieve “awareness” or “feeling”: at its core it is no more than a machine, computing inputs and outputs as directed, rather than anything that has agency in its own right. Beyond this, with current LLMs like ChatGPT producing huge amounts of text, there is a question of contamination: future LLMs will be trained on text, but some of this text will have been created by previous LLMs. So while the first generation of LLMs mimics actual human responses, later generations will be trained on a mixture of both human and machine-generated data. What effect this will have remains to be seen.

But this brings us to an even bigger question.

What is intelligence, anyway?

We hear things like ChatGPT called “intelligent”, but let’s take a moment to unpack what we mean by intelligence.

A cursory search for the definition of “intelligence” yields: intelligence is the ability to acquire and apply knowledge and skills. It is true that ChatGPT can do a variety of things, and that it can refine itself based on past inputs and data, but can ChatGPT apply that knowledge to new situations? Arguably yes. If I tell ChatGPT that I want certain data always organized and outputted in a spreadsheet, it can reasonably do this moving forward without me having to re-prompt it every time.

But by this same metric, there are a variety of software programs that do this already. Microsoft Excel can be trained via formulas, and can go ahead and continue to guess what my next inputs will be based on past inputs. The difference is that I cannot speak in natural language with Microsoft Excel, but with formulas and continued use, it is certainly true that it “gets to know me”, as it were.

Predictive text, for instance in smartphone texting, also comes to learn idiosyncrasies of its users, albeit without a natural language interface.

In the field of psychology alone, there is a tremendous difference of opinion about what intelligence is, and how many different kinds there are. Some psychologists distinguish between crystallized and fluid intelligence, while others point out that how fluently you can process and convey emotional inputs (the “emotional quotient”, i.e. emotional intelligence) is just as valid a measure of human capability.

The oft-cited and ubiquitous “intelligence quotient”, or IQ test, is itself continually under attack for being misleading, biased or incomplete at demonstrating differences in intelligence across age, race, gender and culture. While this metric is used regularly, it is only one paradigm in a much larger discussion about aptitude and neural capability.

For instance, particularly in the field of education, the theory of multiple intelligences has had a strong rise. According to this theory, some people may have a natural aptitude for music or athletics or interpersonal skills, as well as a variety of other dimensions. Its proponents argue that a natural dancer or athlete being so attuned to their body should be regarded as no less remarkable than another student excelling in mathematics and other abstract thinking tasks.

There is no strict consensus on these ideas, and that’s perfectly fine. Where Large Language Models fit in is a question only time will answer, and as academics make strides in their research, perhaps the questions around intelligence’s true nature may be resolved. But they have not been yet.

The future is in humanity’s quest for AGI

And so we come to our last new term: “Artificial General Intelligence” (AGI). AGI is the holy grail, and so far nobody has managed to achieve it. Many say that we may never fully achieve it. But AGI would more or less respond to humans with all the fidelity of a human. It would get restless, and experience feelings. Without you typing and pressing enter, you may get a blip on your screen that says, “Hey David, I’m a little bored. Do you want to chat?” And if you consistently say no, it may even grow to resent you. This is the intelligence we think of when we see movies about AI, and while most people probably confuse this with limited AI like ChatGPT, the differences are very real.

What will AGI look like?

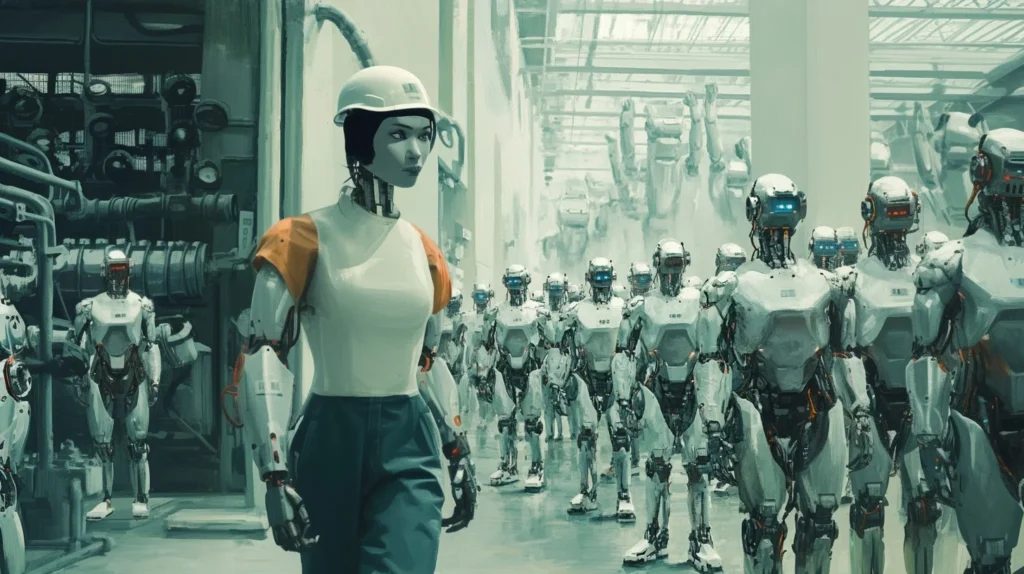

AGI’s personification in film, television and literature often comes as a humanoid machine bound for evil deeds. Think of just about any major film involving sentient robots and you’ll see a dystopian projection of AGI. That said, there is no reason to think that this can, or will, ever be the case. The same way that we talk about extraterrestrials and how foolish it would be that they should resemble humans (why would something from a totally different planet and galaxy look like us?), it may be equally silly to think of AGI resembling the human brain in its behavior.

After all, whether it’s even a goal for us to have AI mimic human thoughts and feelings is itself a question without an answer. Two eminent computer scientists, Stuart Russell and Peter Norvig, famously pointed out that when humans created planes, they didn’t do so with the idea of perfectly mimicking the flight of birds, with flapping wings, feathers, etc. Why do so many of us expect otherwise with AI? Perhaps whatever “plane” version AI is to become will be marvelous for humanity, but will itself not resemble humanity at all. Certainly this has been the case with human ingenuity in the past, to much success.

Doing the most with what we have

Maybe unsurprisingly, the most interesting thing for me is where some of these things intersect. I think AGI is a great and noble pursuit, but we’re not there yet. We do, however, have excellent robots in the world, and very good and ever-growing AI capabilities as well. I think back to our factory example from earlier, where if you move the car-building robots over just a tenth of an inch, they become nothing more than giant paperweights. If we equipped robots like these with sensors, and language processing models like ChatGPT, then we could move them hundreds of feet away, and they could find their way back, all on their own, to the right spot to keep working.

That is a future that I am interested in creating. Robots that are made free through the integration of AI, as if we’re unleashing all their productivity into our everyday lives. We have this at our fingertips, and the good news is we don’t need to wait for things like AGI to realize it.